AR face tracking on iOS is easier than ever. With recent upgrades to the technology, Apple has made AR face tracking easily accessible to all developers.

For this tutorial, we will be using a repo created by Vladimir Cezar: https://github.com/professorcezar/ar-tracker1

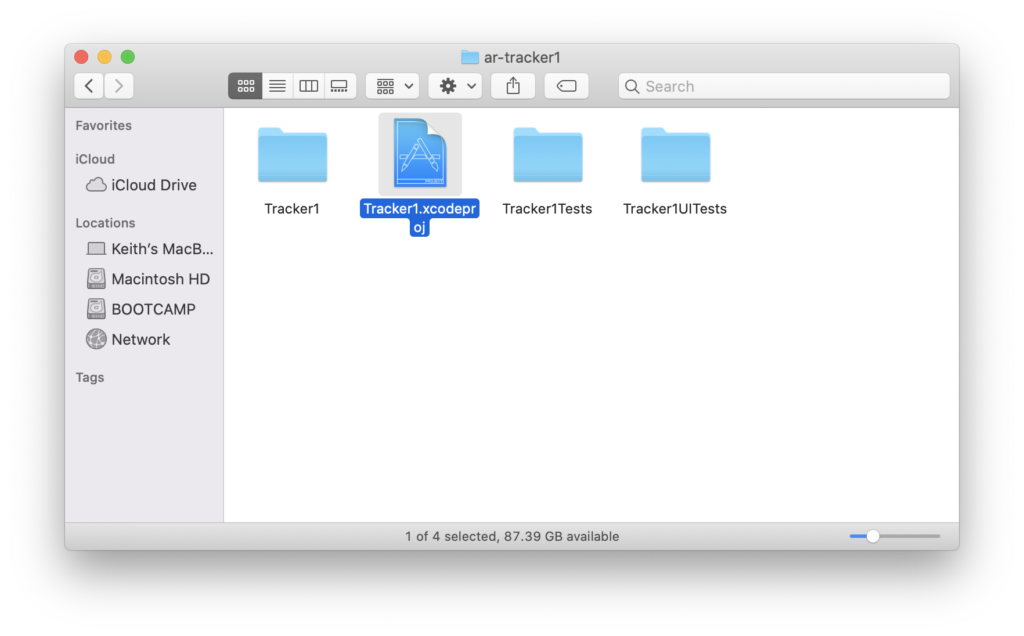

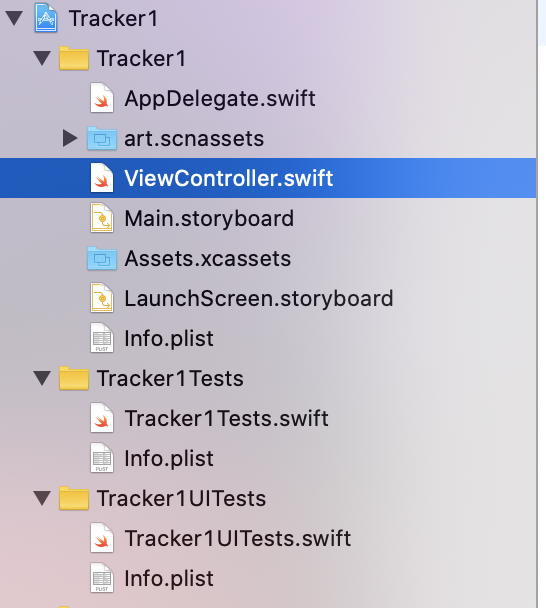

First, clone or download the repo, then open Tracker1.xcodeproj in Xcode and run it to follow along with this tutorial.

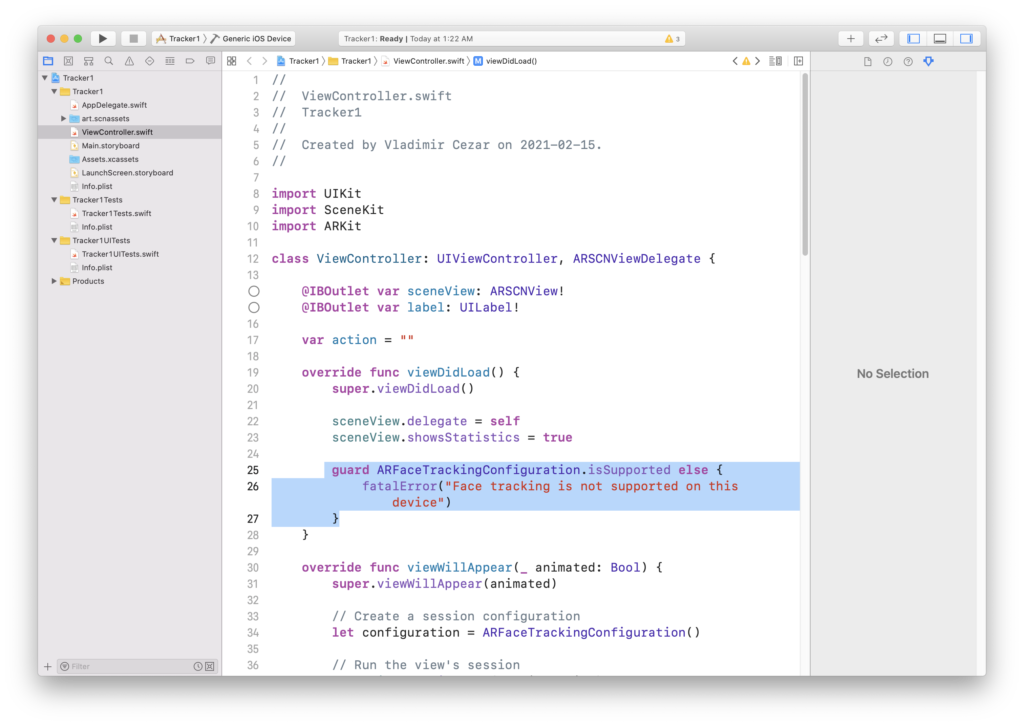

As you can see, we start by checking whether AR face tracking is supported on the device. This check is essential because not all devices and iOS versions support AR face tracking.
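The support check can be sketched roughly as follows. This is not the repo's exact code: `faceTrackingSupported()` and `startFaceTracking(in:)` are hypothetical helper names, and `sceneView` is assumed to be the `ARSCNView` in your view controller. `ARFaceTrackingConfiguration.isSupported` is ARKit's own flag. The `#if` guards are only there so the file also builds on platforms without ARKit, where the helper simply reports `false`.

```swift
#if canImport(ARKit) && os(iOS)
import ARKit
#endif

/// Hypothetical helper: true only when this device can run ARKit face tracking.
func faceTrackingSupported() -> Bool {
    #if canImport(ARKit) && os(iOS)
    // ARKit's class property; false on hardware that cannot do face tracking.
    return ARFaceTrackingConfiguration.isSupported
    #else
    // Built for a platform without ARKit (e.g. macOS or Linux): no face tracking.
    return false
    #endif
}

#if canImport(ARKit) && os(iOS)
/// Hypothetical helper: starts a face tracking session only if the device supports it.
/// Returns false so the caller can show an error message instead of a blank camera view.
func startFaceTracking(in sceneView: ARSCNView) -> Bool {
    guard faceTrackingSupported() else { return false }
    sceneView.session.run(ARFaceTrackingConfiguration())
    return true
}
#endif
```

One option is to call `startFaceTracking(in:)` from `viewWillAppear` and show an alert when it returns `false`, rather than letting the app crash on unsupported hardware.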

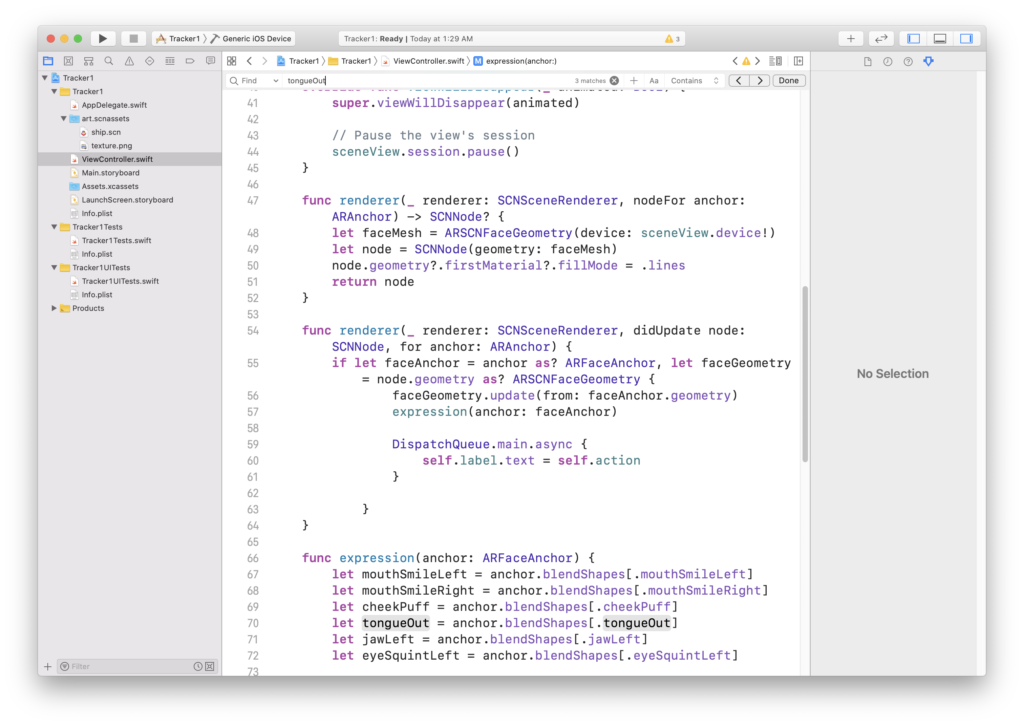

Looking at the render functions in the same file, we can see that they work with an ARFaceAnchor (ARKit adds one automatically when it detects a face), which serves as the anchor for the face within the scene. When a face is detected, the expression function is called to determine what expression the face is making.
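Here is a minimal sketch of what those two `ARSCNViewDelegate` render callbacks typically look like. It is an illustration, not the repo's code: `FaceRenderer`, `onFaceUpdate`, and `ChangeDetector` are hypothetical names. Because `renderer(_:didUpdate:for:)` fires many times per second, the sketch uses a small `ChangeDetector` so "Face detected" / "Face lost" is logged only when `isTracked` actually changes.

```swift
#if canImport(ARKit) && os(iOS)
import ARKit
import SceneKit
#endif

/// Hypothetical helper: reports true only when a value differs from the previous one.
struct ChangeDetector<Value: Equatable> {
    private var last: Value? = nil

    init() {}

    mutating func update(_ value: Value) -> Bool {
        defer { last = value }   // remember the value after comparing
        return last != value
    }
}

#if canImport(ARKit) && os(iOS)
/// Hypothetical delegate showing the two render callbacks.
final class FaceRenderer: NSObject, ARSCNViewDelegate {
    /// Hypothetical hook where an expression function would be called.
    var onFaceUpdate: ((ARFaceAnchor) -> Void)?
    private var trackingState = ChangeDetector<Bool>()

    // ARKit adds an ARFaceAnchor when it detects a face; supply a node to display it.
    func renderer(_ renderer: SCNSceneRenderer, nodeFor anchor: ARAnchor) -> SCNNode? {
        guard anchor is ARFaceAnchor,
              let device = renderer.device,
              let faceGeometry = ARSCNFaceGeometry(device: device) else { return nil }
        faceGeometry.firstMaterial?.fillMode = .lines  // draw the face mesh as a wireframe
        return SCNNode(geometry: faceGeometry)
    }

    // Called as the tracked face changes; refresh the mesh and pass the anchor on.
    func renderer(_ renderer: SCNSceneRenderer, didUpdate node: SCNNode, for anchor: ARAnchor) {
        guard let faceAnchor = anchor as? ARFaceAnchor else { return }
        if trackingState.update(faceAnchor.isTracked) {
            print(faceAnchor.isTracked ? "Face detected" : "Face lost")
        }
        if let faceGeometry = node.geometry as? ARSCNFaceGeometry {
            faceGeometry.update(from: faceAnchor.geometry)
        }
        onFaceUpdate?(faceAnchor)
    }
}
#endif
```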

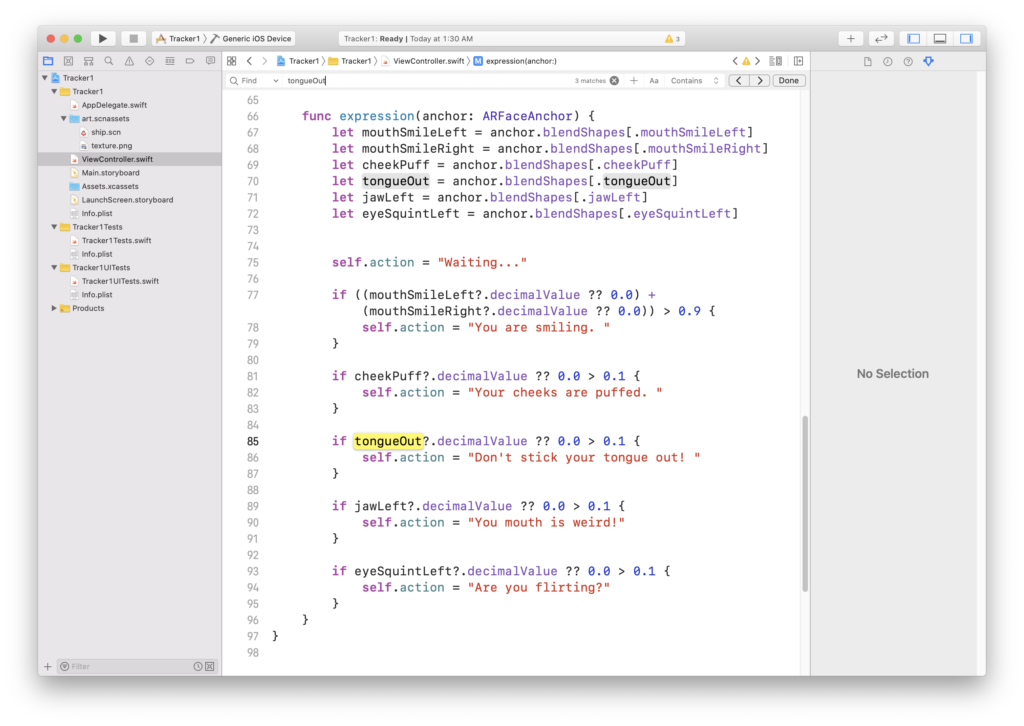

The expression function is where the different facial expressions are detected. It contains all the code you need for adding or changing the responses to different expressions.
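An expression function along these lines might look like the sketch below. It assumes detection is based on ARKit's blend shape coefficients, which range from 0.0 (neutral) to 1.0 (fully expressed). `FaceExpression`, `classifyExpression`, `expression(for:)`, and the 0.5 threshold are all hypothetical choices, not taken from the repo. The classification logic is kept free of ARKit so it is easy to test, and only the thin adapter reads `faceAnchor.blendShapes`.

```swift
#if canImport(ARKit) && os(iOS)
import ARKit
#endif

/// Hypothetical set of expressions this sketch recognizes.
enum FaceExpression: Equatable {
    case smile, jawOpen, blink, neutral
}

/// Classifies blend shape coefficients (0.0 = neutral, 1.0 = fully expressed).
/// The default threshold of 0.5 is an arbitrary assumption; tune it for your app.
func classifyExpression(smileLeft: Float, smileRight: Float, jawOpen: Float,
                        blinkLeft: Float, blinkRight: Float,
                        threshold: Float = 0.5) -> FaceExpression {
    if (smileLeft + smileRight) / 2 > threshold { return .smile }
    if jawOpen > threshold { return .jawOpen }
    if blinkLeft > threshold && blinkRight > threshold { return .blink }
    return .neutral
}

#if canImport(ARKit) && os(iOS)
/// Reads the relevant coefficients from an ARFaceAnchor (missing values count as 0).
func expression(for faceAnchor: ARFaceAnchor) -> FaceExpression {
    let shapes = faceAnchor.blendShapes
    func value(_ location: ARFaceAnchor.BlendShapeLocation) -> Float {
        shapes[location]?.floatValue ?? 0
    }
    return classifyExpression(smileLeft: value(.mouthSmileLeft),
                              smileRight: value(.mouthSmileRight),
                              jawOpen: value(.jawOpen),
                              blinkLeft: value(.eyeBlinkLeft),
                              blinkRight: value(.eyeBlinkRight))
}
#endif
```

The order of the `if` checks decides which expression wins when several are active at once; here a smile takes priority over an open jaw.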

Each expression can trigger code! You can log to the console, make text appear on screen, and even make different 3D models appear in AR. Your imagination is the limit.
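As a rough illustration of those three kinds of responses, the sketch below logs to the console, updates a label, and shows a 3D model only while smiling. `FaceExpression`, `responseText(for:)`, `respond(to:label:modelNode:)`, and the message strings are hypothetical; `label` and `modelNode` are assumed to already exist in your view controller. One detail worth knowing: SceneKit calls the render callbacks off the main thread, so the UIKit update is dispatched to the main queue.

```swift
#if canImport(UIKit) && os(iOS)
import UIKit
import SceneKit
#endif

/// Hypothetical expressions, mirroring whatever your expression function detects.
enum FaceExpression: Equatable { case smile, jawOpen, blink, neutral }

/// Placeholder text to log or display for each expression.
func responseText(for expression: FaceExpression) -> String {
    switch expression {
    case .smile: return "Nice smile!"
    case .jawOpen: return "Mouth open"
    case .blink: return "Blink detected"
    case .neutral: return ""
    }
}

#if canImport(UIKit) && os(iOS)
/// Hypothetical responder called from the render callback with the detected expression.
func respond(to expression: FaceExpression, label: UILabel, modelNode: SCNNode) {
    let text = responseText(for: expression)
    print("Expression:", expression, text)        // log to the console
    modelNode.isHidden = (expression != .smile)   // show a 3D model only while smiling
    // Render callbacks run off the main thread, so touch UIKit on the main queue.
    DispatchQueue.main.async {
        label.text = text                         // make text appear on screen
    }
}
#endif
```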

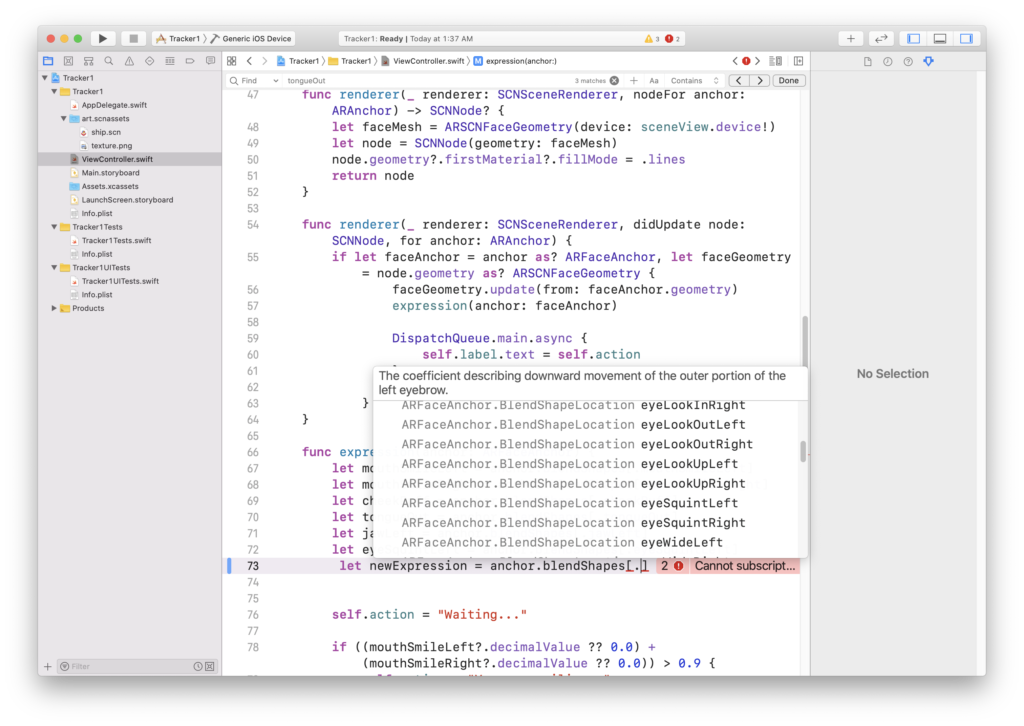

If you want to detect more expressions, it’s simple! ARFaceAnchor’s `blendShapes` property gives you access to a large set of predefined facial movements (such as `jawOpen`, `mouthSmileLeft`, or `tongueOut`) that you can then use to run code.
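One way to keep new expressions easy to add is a small table of rules, sketched below under a few assumptions: `ExpressionRule`, `triggeredRules`, `extraShapes`, and `coefficients(from:)` are hypothetical names, and the thresholds are arbitrary. The blend shape locations used (`.tongueOut`, `.cheekPuff`, `.browInnerUp`) are real `ARFaceAnchor.BlendShapeLocation` values; with this layout, adding an expression means adding one dictionary entry and one rule.

```swift
#if canImport(ARKit) && os(iOS)
import ARKit
#endif

/// Hypothetical detection rule: fires when a named coefficient exceeds its threshold.
struct ExpressionRule {
    let name: String
    let threshold: Float
}

/// Returns the names of the rules whose coefficient is above the rule's threshold.
/// `coefficients` maps rule names to blend shape values in 0.0...1.0.
func triggeredRules(_ rules: [ExpressionRule], coefficients: [String: Float]) -> [String] {
    rules.filter { (coefficients[$0.name] ?? 0) > $0.threshold }.map(\.name)
}

#if canImport(ARKit) && os(iOS)
/// Extra expressions to watch; add an entry here to detect something new.
let extraShapes: [String: ARFaceAnchor.BlendShapeLocation] = [
    "tongueOut": .tongueOut,
    "cheekPuff": .cheekPuff,
    "browInnerUp": .browInnerUp,
]

/// Copies the watched coefficients out of a face anchor (missing values count as 0).
func coefficients(from faceAnchor: ARFaceAnchor) -> [String: Float] {
    var result: [String: Float] = [:]
    for (name, location) in extraShapes {
        result[name] = faceAnchor.blendShapes[location]?.floatValue ?? 0
    }
    return result
}
#endif
```

In a render callback you could then call `triggeredRules(rules, coefficients: coefficients(from: faceAnchor))` and run code for each name it returns.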

Hopefully you learned something new about AR technology on iOS. If you create anything using my tutorial, please send me an email at keithgardz@live.com. I would love to see what you created!